This is a summary of the paper "NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis".

Keywords: scene representation, view synthesis, image-based rendering, volume rendering, 3D deep learning

A brief understanding: How to train a network for NeRF

Training a neural network for NeRF (Neural Radiance Fields) involves several steps, including data preparation, network architecture design, training, and evaluation.

- Data preparation: The first step is to prepare the data that will be used to train the neural network. For NeRF this means capturing a set of RGB images of the object or environment from different viewpoints, together with the camera pose and intrinsic parameters for each image; no 3D scans or per-point color labels are required.

- Network architecture design: The next step is to design the architecture of the neural network that will be used to represent the object or environment. This typically involves defining the number and types of layers in the network, as well as the size and shape of the network.

- Training: Once the network architecture has been designed, the next step is to train the network using the prepared data. This involves feeding the data into the network and adjusting the weights of the network over multiple iterations, or epochs, to optimize the performance of the network.

- Evaluation: After the network has been trained, it is typically evaluated on a separate set of data to measure its performance and ensure that it is generating accurate results. This can involve comparing the output of the network to the ground truth data, as well as using visualization techniques to compare the rendered images produced by the network to actual photographs of the object or environment.

Overall, the process of training a neural network for NeRF involves a combination of data preparation, network architecture design, training, and evaluation to produce a highly accurate and efficient 3D representation of an object or environment.

By Vicuna-13b

Contribution

- An approach for representing continuous scenes with complex geometry and materials as 5D neural radiance fields, parameterized as basic MLP networks.

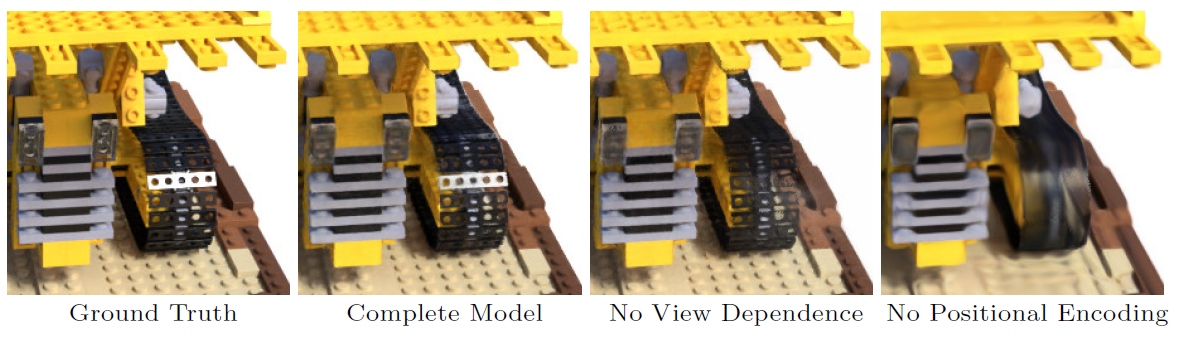

- A differentiable rendering procedure based on classical volume rendering techniques, which we use to optimize these representations from standard RGB images. This includes a hierarchical sampling strategy to allocate the MLP's capacity towards space with visible scene content.

- A positional encoding to map each input 5D coordinate into a higher dimensional space, which enables us to successfully optimize neural radiance fields to represent high-frequency scene content.

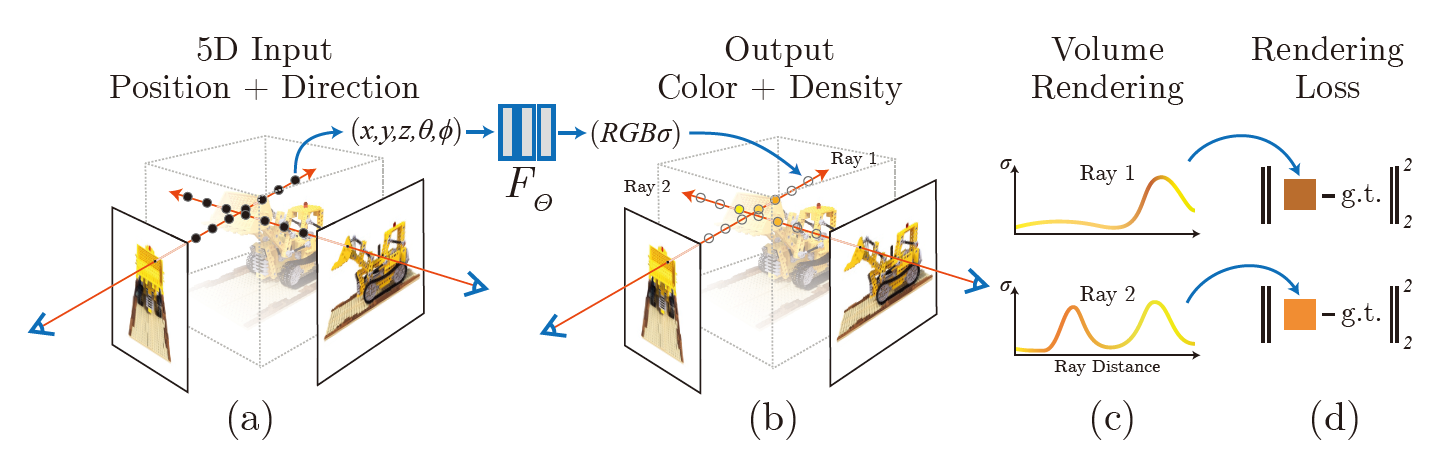

An overview of our neural radiance field scene representation and differentiable rendering procedure. Here g.t. represents the "ground truth", which means the real scene.

Overview of the Rendering Process

- March camera rays through the scene to generate a sampled set of 3D points.

- Use those points and their corresponding 2D viewing directions as input to the neural network to produce an output set of colors and densities.

- Use classical volume rendering techniques to accumulate those colors and densities into a 2D image.

Because this process is naturally differentiable, we can use gradient descent to optimize this model by minimizing the error between each observed image and the corresponding views rendered from our representation.

Neural Radiance Field Scene Representation

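This is a method for synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views. Our algorithm represents a scene using a fully-connected (non-convolutional) deep network, whose input is a single continuous 5D coordinate (spatial location $(x, y, z)$ and viewing direction $(\theta, \phi)$) and whose output is the volume density and view-dependent emitted radiance at that spatial location.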

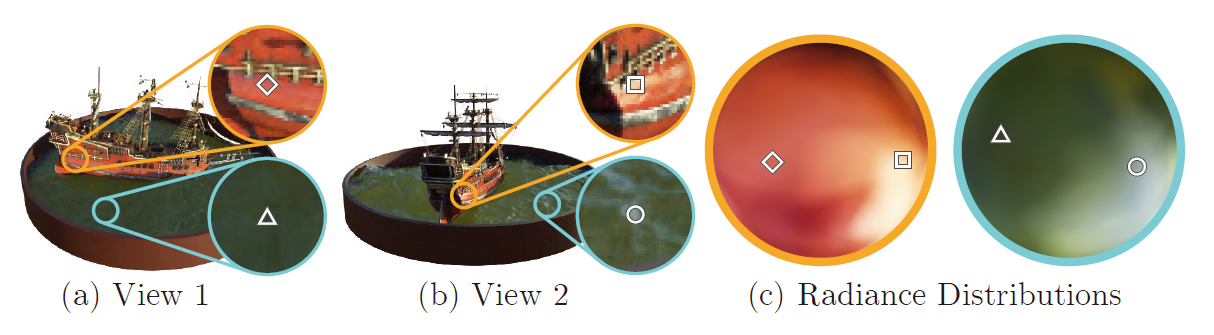

From Object to Scene: Volume Rendering with Radiance Fields

Our 5D neural radiance field represents a scene as the volume density and directional emitted radiance at any point in space.

We render the color of any ray passing through the scene using principles from classical volume rendering[1].

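The volume density $\sigma(\mathbf{x})$ can be interpreted as the differential probability of a ray terminating at an infinitesimal particle at location $\mathbf{x}$.

The expected color $C(\mathbf{r})$ of camera ray $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$ with near and far bounds $t_n$ and $t_f$ is:

$$C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt, \quad \text{where } T(t) = \exp\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right).$$

The function $T(t)$ denotes the accumulated transmittance along the ray from $t_n$ to $t$, i.e., the probability that the ray travels from $t_n$ to $t$ without hitting any other particle.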

Example:

In (a) and (b), we show the appearance of two fixed 3D points from two different camera positions: one on the side of the ship (orange insets) and one on the surface of the water (blue insets). Our method predicts the changing appearance of these two 3D points with respect to the direction of observation.

Discrete Sampling

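Rendering a view from our continuous neural radiance field requires estimating this integral $C(\mathbf{r})$ for a camera ray traced through each pixel of the desired virtual camera. Deterministic quadrature[3] would limit the representation's resolution, because the MLP would only ever be queried at a fixed discrete set of locations.

Instead, we partition $[t_n, t_f]$ into $N$ evenly-spaced bins and then draw one sample uniformly at random from within each bin:

$$t_i \sim \mathcal{U}\left[t_n + \frac{i-1}{N}(t_f - t_n),\; t_n + \frac{i}{N}(t_f - t_n)\right].$$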
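The stratified sampling step (split the ray's depth range into evenly spaced bins, then draw one uniform sample per bin) can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function name `stratified_samples` and the parameters `t_near`, `t_far`, and `n_bins` are hypothetical names chosen here, not identifiers from the paper.

```python
import random

def stratified_samples(t_near, t_far, n_bins, rng=random):
    """Draw one depth uniformly at random from each of n_bins equal-width bins."""
    width = (t_far - t_near) / n_bins
    # Bin i covers [t_near + i * width, t_near + (i + 1) * width).
    return [t_near + (i + rng.random()) * width for i in range(n_bins)]

# Example: 64 sorted depths between the near and far bounds of a ray.
depths = stratified_samples(2.0, 6.0, 64, random.Random(0))
```

Because every bin contributes exactly one sample, the depths are sorted by construction, while their exact positions change from one training iteration to the next.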

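From Scene to Object: Estimation of $\hat{C}(\mathbf{r})$

$$\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - \exp(-\sigma_i \delta_i)\right) \mathbf{c}_i, \quad \text{where } T_i = \exp\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)$$

and $\delta_i = t_{i+1} - t_i$ is the distance between adjacent samples.

This function for calculating $\hat{C}(\mathbf{r})$ from the set of $(\mathbf{c}_i, \sigma_i)$ values is trivially differentiable and reduces to traditional alpha compositing[4] with alpha values $\alpha_i = 1 - \exp(-\sigma_i \delta_i)$.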
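The discrete estimate of a ray's color can be sketched as a single loop that tracks the accumulated transmittance and adds up the contributions $T_i (1 - \exp(-\sigma_i \delta_i))\,\mathbf{c}_i$. The sketch below is a plain-Python illustration under stated assumptions: the name `composite_ray` is hypothetical, and the very large `last_delta` used for the final sample (which has no next sample to measure a distance to) is an assumption, not something specified in this summary.

```python
import math

def composite_ray(ts, sigmas, colors, last_delta=1e10):
    """Return (rgb, weights) for one ray from sampled depths, densities, colors."""
    n = len(ts)
    # delta_i = t_{i+1} - t_i; the last interval is assumed to be very long.
    deltas = [ts[i + 1] - ts[i] for i in range(n - 1)] + [last_delta]
    transmittance = 1.0              # T_1 = 1: nothing blocks the first sample
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, delta, color in zip(sigmas, deltas, colors):
        alpha = 1.0 - math.exp(-sigma * delta)
        w = transmittance * alpha    # w_i = T_i * (1 - exp(-sigma_i * delta_i))
        weights.append(w)
        rgb = [acc + w * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return rgb, weights

# Empty space followed by a very dense red sample: the ray should come out red.
rgb, weights = composite_ray(
    [1.0, 2.0, 3.0, 4.0],
    [0.0, 0.0, 1e6, 1.0],
    [(0, 1, 0), (0, 1, 0), (1, 0, 0), (0, 0, 1)],
)
```

Samples behind the dense one receive zero weight because the transmittance has already dropped to zero, which is how occlusion falls out of the formula.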

Implementation details

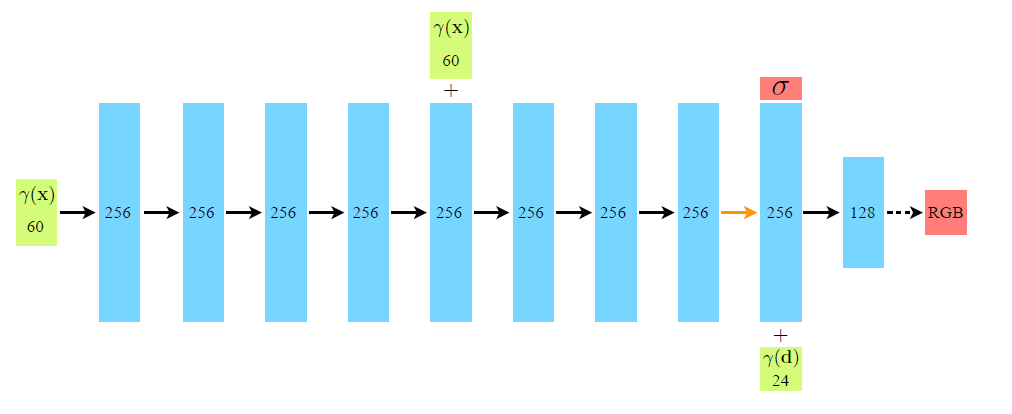

Network Architecture

- First 8 fully-connected layers (ReLU, 256 channels per layer):
  - Input: 3D coordinate $\mathbf{x}$ processed by $\gamma$
  - Output: volume density $\sigma$; 256-dimensional feature vector.

- One additional fully-connected layer (ReLU, 128 channels):
  - Input: 256-dimensional feature vector; Cartesian viewing direction unit vector $\mathbf{d}$ processed by $\gamma$
  - Output: View-dependent RGB color

Details of variables are in Improving Scenes of High Frequency.

Training

Datasets: Captured RGB images of the scene, the corresponding camera poses and intrinsic parameters, and scene bounds (we use ground truth camera poses, intrinsics, and bounds for synthetic data, and use the COLMAP structure-from-motion package to estimate these parameters for real data).

Iteration: Randomly sample a batch of camera rays from the set of all pixels in the dataset, then follow the hierarchical sampling procedure to query samples from the coarse network and the fine network.

Loss: The total squared error between the rendered and true pixel colors for both the coarse and fine renderings.
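As a minimal sketch of this loss, the function below sums the squared color error of the coarse rendering and of the fine rendering over a batch of rays. The name `nerf_loss` and the representation of each color as a 3-tuple of floats are illustrative assumptions.

```python
def squared_error(pred, target):
    """Squared Euclidean distance between two RGB colors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target))

def nerf_loss(coarse_rgb, fine_rgb, true_rgb):
    """Total squared error of the coarse and fine renderings over a batch of rays."""
    return sum(
        squared_error(c, t) + squared_error(f, t)
        for c, f, t in zip(coarse_rgb, fine_rgb, true_rgb)
    )

# One ray: the coarse render is black, the fine render matches the true red pixel.
loss = nerf_loss([(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)])
```

Keeping the coarse term in the loss matters because the coarse network's weights decide where the fine network's samples are placed.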

In our experiments, we use a batch size of 4096 rays, each sampled at $N_c = 64$ coordinates in the coarse volume and $N_f = 128$ additional coordinates in the fine volume. We use the Adam optimizer with a learning rate that begins at $5 \times 10^{-4}$ and decays exponentially to $5 \times 10^{-5}$ over the course of optimization (other Adam hyper-parameters are left at default values of $\beta_1 = 0.9$, $\beta_2 = 0.999$, and $\epsilon = 10^{-7}$). The optimization for a single scene typically takes around 100k to 300k iterations to converge on a single NVIDIA V100 GPU (about 1 to 2 days).
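An exponentially decaying learning rate of this kind can be sketched as a geometric interpolation between a start and an end value. The defaults below use the values reported in the NeRF paper ($5 \times 10^{-4}$ decaying to $5 \times 10^{-5}$); the function name `learning_rate` and the choice of a smooth per-step schedule (rather than, say, a staircase) are assumptions made for illustration.

```python
def learning_rate(step, total_steps, lr_start=5e-4, lr_end=5e-5):
    """Exponential decay from lr_start at step 0 to lr_end at total_steps."""
    progress = step / total_steps
    return lr_start * (lr_end / lr_start) ** progress

# Halfway through training the rate is the geometric mean of the two endpoints.
halfway = learning_rate(100_000, 200_000)
```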

Notable Tricks

Improving Scenes of High Frequency

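Deep networks are biased towards learning lower frequency functions. Mapping the inputs to a higher dimensional space using high frequency functions before passing them to the network enables better fitting of data that contains high frequency variation.

We leverage these findings in the context of neural scene representations, and show that reformulating $F_\Theta$ as a composition of two functions $F_\Theta = F'_\Theta \circ \gamma$, one learned and one not, significantly improves performance. Here $\gamma$ is a mapping from $\mathbb{R}$ into a higher dimensional space $\mathbb{R}^{2L}$, and $F'_\Theta$ is still simply a regular MLP:

$$\gamma(p) = \left(\sin(2^0 \pi p), \cos(2^0 \pi p), \ldots, \sin(2^{L-1} \pi p), \cos(2^{L-1} \pi p)\right).$$

In the experiments, we set $L = 10$ for $\gamma(\mathbf{x})$ and $L = 4$ for $\gamma(\mathbf{d})$.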
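A minimal sketch of the positional encoding $\gamma$, which maps each scalar $p$ to $(\sin(2^0 \pi p), \cos(2^0 \pi p), \ldots, \sin(2^{L-1} \pi p), \cos(2^{L-1} \pi p))$ and is applied to each coordinate separately. The function names and the order in which the per-coordinate encodings are concatenated are assumptions for illustration.

```python
import math

def positional_encoding(p, num_freqs):
    """Encode one scalar as 2 * num_freqs sine/cosine features."""
    features = []
    for k in range(num_freqs):
        freq = (2.0 ** k) * math.pi
        features.append(math.sin(freq * p))
        features.append(math.cos(freq * p))
    return features

def encode_vector(v, num_freqs):
    """Apply the encoding to every component of v and concatenate the results."""
    return [f for p in v for f in positional_encoding(p, num_freqs)]

# With L = 10 a 3D position becomes 60 features; with L = 4 a direction becomes 24.
encoded_position = encode_vector((0.1, -0.4, 0.7), 10)
encoded_direction = encode_vector((0.0, 0.0, 1.0), 4)
```

The higher-frequency terms change quickly for small changes in $p$, which is what lets the MLP that follows represent fine detail.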

Reducing the Cost with Hierarchical Sampling

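Our rendering strategy of densely evaluating the neural radiance field network at $N$ query points along each camera ray is inefficient: free space and occluded regions that do not contribute to the rendered image are still sampled repeatedly.

Instead of just using a single network to represent the scene, we simultaneously optimize two networks: one "coarse" and one "fine".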

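The coarse Network

We first sample a set of $N_c$ locations using stratified sampling and evaluate the "coarse" network at these locations. Rewrite the alpha composited color as a weighted sum of all sampled colors $\mathbf{c}_i$ along the ray:

$$\hat{C}_c(\mathbf{r}) = \sum_{i=1}^{N_c} w_i \mathbf{c}_i, \quad w_i = T_i \left(1 - \exp(-\sigma_i \delta_i)\right).$$

The fine Network

Normalizing these weights as $\hat{w}_i = w_i / \sum_{j=1}^{N_c} w_j$ produces a piecewise-constant PDF along the ray. We sample a second set of $N_f$ locations from this distribution using inverse transform sampling[5], evaluate the "fine" network at the union of the first and second set of samples, and compute the final rendered color of the ray $\hat{C}_f(\mathbf{r})$ using all $N_c + N_f$ samples.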
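The fine sampling step can be sketched as inverse transform sampling from a piecewise-constant PDF: normalize the coarse weights, build their running sum (the CDF), draw a uniform number, find the bin whose CDF interval contains it, and place the sample proportionally inside that bin. This is an illustrative sketch only; the name `sample_fine_depths` and the convention that `bin_edges` has one more entry than `weights` are assumptions.

```python
import bisect
import random

def sample_fine_depths(bin_edges, weights, n_fine, rng=random):
    """Draw n_fine depths from the piecewise-constant PDF defined by weights.

    weights[i] belongs to the bin [bin_edges[i], bin_edges[i + 1]).
    """
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("at least one weight must be positive")
    pdf = [w / total for w in weights]        # normalized weights
    cdf = [0.0]
    for p in pdf:
        cdf.append(cdf[-1] + p)               # running sum, ends near 1.0
    depths = []
    for _ in range(n_fine):
        u = rng.random() * cdf[-1]            # uniform draw over the CDF range
        k = bisect.bisect_right(cdf, u) - 1   # bin with cdf[k] <= u < cdf[k + 1]
        k = min(k, len(pdf) - 1)              # guard against float round-off
        frac = (u - cdf[k]) / pdf[k] if pdf[k] > 0.0 else 0.5
        depths.append(bin_edges[k] + frac * (bin_edges[k + 1] - bin_edges[k]))
    return sorted(depths)

# Bins with zero weight get no samples; the middle bin gets about 75% of them.
fine = sample_fine_depths([0.0, 1.0, 2.0, 3.0], [0.0, 3.0, 1.0], 1000, random.Random(0))
```

Samples concentrate where the coarse network found visible content, so the fine network spends its evaluations on the parts of the ray that affect the pixel.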

Conclusion

TODO

Explanations

[1] Volume rendering is a family of techniques used in computer graphics and computer vision to display a 2D projection of a 3D volumetric data set. In the ray-based form used here, a ray is cast through the volume for each pixel, the volume is sampled at points along the ray, and the colors and opacities of those samples are accumulated into the final pixel color.

[2] If a distribution (here in 3D space) has a density

[3] Deterministic quadrature is a mathematical method used to estimate the definite integral of a function. The basic idea is to divide the area under the curve into smaller areas, and calculate the approximate value of the definite integral by summing the areas of the smaller areas. There are several types of deterministic quadrature methods, including the trapezoidal rule, Simpson's rule, and Gaussian quadrature.

[4] Alpha compositing is a technique used in computer graphics and image processing to combine two or more images or video frames by blending them together using an alpha channel. The alpha channel is a mask that defines the transparency or opacity of each pixel in the image. Alpha compositing is used to create composites, where the resulting image is a combination of the original images, with the transparency or opacity of each image controlled by the alpha channel. The alpha channel can be used to create effects such as blending, fading, and layering. Alpha compositing - Wikipedia

[5] Inverse transform sampling (ITS) is a technique for drawing random samples from a probability distribution given its cumulative distribution function (CDF). The basic idea is to draw a number $u$ uniformly from $[0, 1]$ and return the value $x$ at which the CDF equals $u$, i.e., $x = F^{-1}(u)$; the resulting samples follow the target distribution. Inverse transform sampling - Wikipedia